Method Overview

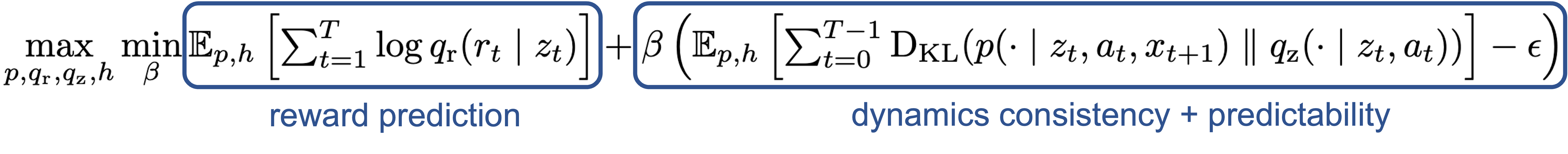

Representation Learning

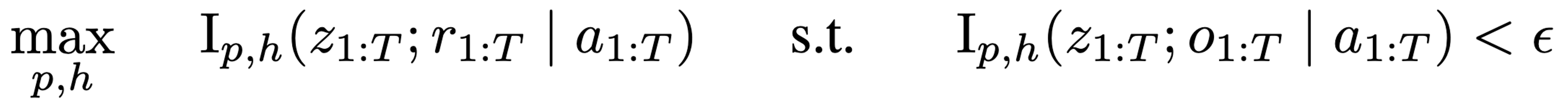

RePo learns a minimally task-relevant representation by optimizing an information bottleneck objective. Specifically, we learn a latent representation that is maximally informative of the current and future rewards, while sharing minimal information with the observations.

This objective is intractable, so we derive a variational lower bound. The final form consists of two terms: the first encourages the latent to be reward-predictive, and the second enforces dynamics consistency. A closer look at the second term reveals that it also incentivizes the representation to discard anything that cannot be predicted from the current latent and action, including the spurious variations that are often present in the real world.
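
As a rough sketch of what such an objective can look like, the constrained problem and its two-term relaxation may be written as follows. The notation is an assumption made here for illustration and is not fixed by the text above: observations $o_t$, actions $a_t$, rewards $r_t$, latents $z_t$, an information budget $\epsilon$, a posterior $p$, and learned reward and dynamics models $q$.

$$
\max \; I(z_{1:T};\, r_{1:T} \mid a_{1:T})
\quad \text{s.t.} \quad
I(z_{1:T};\, o_{1:T} \mid a_{1:T}) \le \epsilon
$$

$$
\max \; \mathbb{E}\Big[\sum_{t} \log q(r_t \mid z_t)\Big]
\quad \text{s.t.} \quad
\mathbb{E}\Big[\sum_{t} D_{\mathrm{KL}}\big(p(z_{t+1} \mid z_t, a_t, o_{t+1}) \,\|\, q(z_{t+1} \mid z_t, a_t)\big)\Big] \le \epsilon
$$

Under this reading, the KL term is small only when the observation-conditioned latent can already be predicted from the previous latent and action, which is why unpredictable detail in the observation is discarded.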

We parametrize the models using a recurrent state-space architecture and optimize the objective using dual gradient descent.
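
To make the optimization scheme concrete, here is a minimal sketch of dual gradient descent on a toy scalar problem. It is not the RePo training loop: the quadratic loss stands in for the reward-prediction term, the linear constraint stands in for the budget on the consistency term, and the function name, learning rates, and step count are all illustrative assumptions.

```python
def dual_gradient_descent(grad_loss, constraint, grad_constraint, x0,
                          primal_lr=0.05, dual_lr=0.05, steps=2000):
    """Minimize loss(x) subject to constraint(x) <= 0 for a scalar x.

    Alternates a gradient step on x for the Lagrangian
    loss(x) + lam * constraint(x) with a projected ascent step on lam.
    """
    x, lam = x0, 0.0
    for _ in range(steps):
        # Primal step: descend the Lagrangian with respect to x.
        x -= primal_lr * (grad_loss(x) + lam * grad_constraint(x))
        # Dual step: increase lam while the constraint is violated,
        # decrease it otherwise, and keep it non-negative.
        lam = max(0.0, lam + dual_lr * constraint(x))
    return x, lam


# Toy stand-ins (assumed): loss(x) = (x - 3)^2, constraint x - 1 <= 0.
x_opt, lam_opt = dual_gradient_descent(
    grad_loss=lambda x: 2.0 * (x - 3.0),
    constraint=lambda x: x - 1.0,
    grad_constraint=lambda x: 1.0,
    x0=0.0,
)
print(round(x_opt, 3), round(lam_opt, 3))
```

The multiplier settles at the value that balances the two terms, so the trade-off weight does not have to be tuned by hand; only the constraint level is specified.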

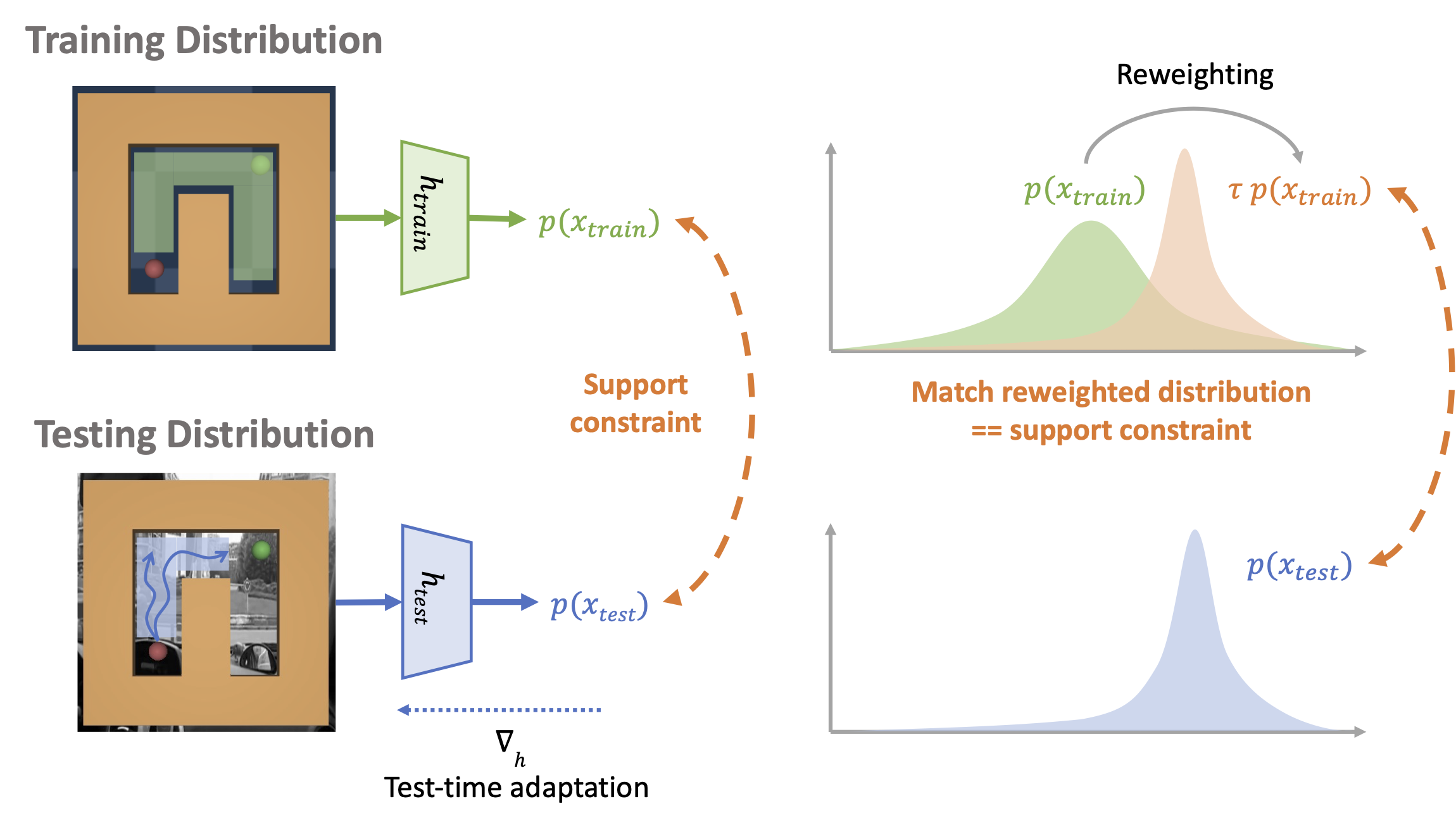

Test-Time Adaptation via Support Constraint

While the representation is resilient to spurious variations, it is not invariant to significant distribution shifts, because the visual encoder does not generalize. We therefore appeal to test-time adaptation to align the encoder while keeping the policy and model frozen. Standard unsupervised alignment methods employ a distribution matching objective, which is not suitable for online adaptation due to exploration inefficiency. We propose instead to match the support of the test-time distribution of visual features with that of the training-time distribution.
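
The difference between the two kinds of matching can be illustrated with a small sketch. This is not the adaptation objective used by RePo: the nearest-neighbor penalty, the `radius` threshold, and the mean-gap statistic are hypothetical stand-ins, chosen only to show that features covering part of the training support incur no support penalty even though their distribution differs.

```python
import math


def nearest_distance(z, reference):
    """Euclidean distance from feature z to its closest reference feature."""
    return min(math.dist(z, r) for r in reference)


def support_violation(test_feats, train_feats, radius):
    """Average distance by which test features fall outside the training
    support, where the support is approximated (an assumption of this
    sketch) by balls of the given radius around the training features."""
    excess = [max(0.0, nearest_distance(z, train_feats) - radius)
              for z in test_feats]
    return sum(excess) / len(excess)


def mean_gap(test_feats, train_feats):
    """A crude distribution-matching statistic: distance between means."""
    dim = len(train_feats[0])

    def mean(feats):
        return [sum(f[i] for f in feats) / len(feats) for i in range(dim)]

    return math.dist(mean(test_feats), mean(train_feats))


# Training features spread along a line; values are made up for illustration.
train = [(float(x), 0.0) for x in range(10)]
# Early online data: inside the training support but covering only one end.
narrow = [(0.0, 0.0), (1.0, 0.0), (0.5, 0.0)]
# Misaligned encoder output: far from every training feature.
shifted = [(0.0, 5.0), (1.0, 5.0)]

print(support_violation(narrow, train, radius=0.5), mean_gap(narrow, train))
print(support_violation(shifted, train, radius=0.5))
```

In this toy setting the narrow batch has a large mean gap, so a distribution-matching loss would push the encoder to change even though the features are already valid, while the support penalty stays at zero and reacts only to the shifted batch.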